Is AI Hitting a Wall?

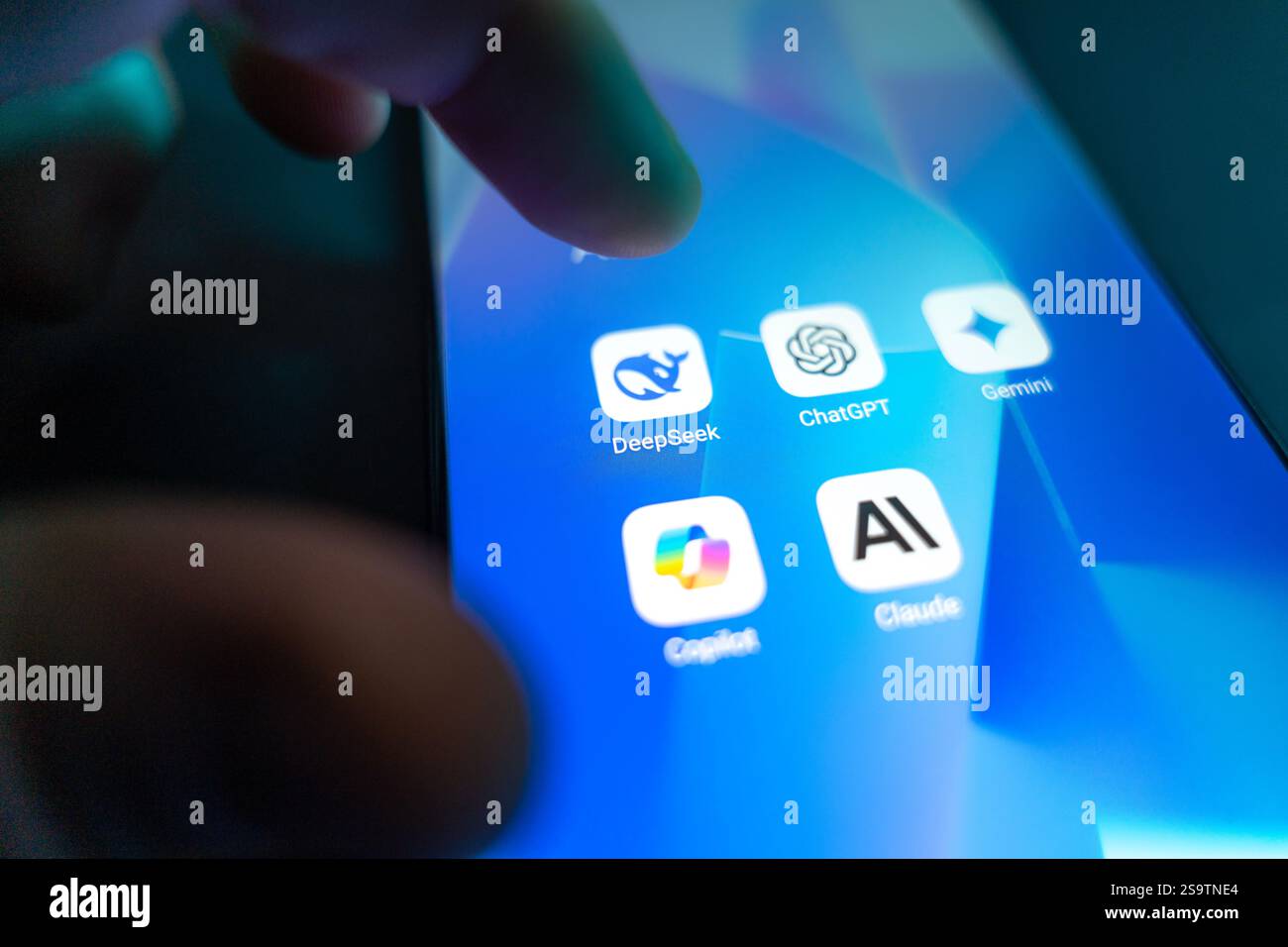

As an open-source model, DeepSeek V3 represents just the beginning of a new era in AI accessibility and performance. Note that we didn't specify the vector database for one of the models, in order to compare the model's performance against its RAG counterpart. This is a noteworthy achievement, because it underscores the model's capacity to learn and generalize effectively through RL alone. R1 is akin to OpenAI o1, which was released on December 5, 2024. We're talking about a one-month delay: a brief window, intriguingly, between the leading closed labs and the open-source community. A brief window, critically, between the United States and China. Does China aim to overtake the United States in the race toward AGI, or is it moving at just the pace needed to capitalize on American companies' slipstream? The question on the rule of law generated the most divided responses, showcasing how diverging narratives in China and the West can affect LLM outputs.

When DeepSeek trained R1-Zero they found it hard to read the responses of the model. That's R1. R1-Zero is the same thing but without SFT. DeepSeek wanted to keep SFT to a minimum. That's what DeepSeek attempted with R1-Zero and nearly achieved. But still, the relative success of R1-Zero is impressive. The former is shared (both R1 and R1-Zero are based on DeepSeek-V3). There are two schools of thought. There are no public reports of Chinese officials harnessing DeepSeek for personal information on U.S. The reversal of policy, nearly 1,000 days since Russia began its full-scale invasion of Ukraine, comes largely in response to Russia's deployment of North Korean troops to supplement its forces, a development that has caused alarm in Washington and Kyiv, a U.S. Nvidia's stock plummeted nearly 17%, the largest single-day loss in U.S. Did they find a way to make these models incredibly cheap that OpenAI and Google ignored? Let's review the parts I find most interesting.

Soon, they recognized it played more like a human: beautifully, with an idiosyncratic style. Neither OpenAI, Google, nor Anthropic has given us anything like this. At only $5.5 million to train, it's a fraction of the cost of models from OpenAI, Google, or Anthropic, which often run into the hundreds of millions. All of that at a fraction of the cost of comparable models. Speaking of costs, somehow DeepSeek has managed to build R1 at 5-10% of the price of o1 (and that's being charitable with OpenAI's input-output pricing). These challenges suggest that achieving improved performance often comes at the expense of efficiency, resource utilization, and cost. Mathematical: Performance on the MATH-500 benchmark has improved from 74.8% to 82.8%. If I were writing about an OpenAI model I'd have to end the post here, because they only give us demos and benchmarks. Wasn't OpenAI half a year ahead of the rest of the US AI labs?

And more than a year ahead of Chinese companies like Alibaba or Tencent? More on that soon. Second, we're learning to use synthetic data, unlocking many more capabilities in what the model can actually do from the data and models we have. No human can play chess like AlphaZero. But let's speculate a bit more here; you know I like to do that. More importantly, it didn't have our manners either. What if (bear with me here) you didn't even need the pre-training phase at all? III. What if AI didn't need us humans? Instead of showing Zero-type models millions of examples of human language and human reasoning, why not teach them the basic rules of logic, deduction, induction, fallacies, cognitive biases, the scientific method, and general philosophical inquiry, and let them discover better ways of thinking than humans could ever come up with? Let me get a bit technical here (not much) to explain the difference between R1 and R1-Zero.
